🌟 Introduction

Among supervised machine learning algorithms, Decision Trees and Random Forests are widely used because they balance interpretability, flexibility, and strong performance.

- Decision Trees are simple, visual, and easy to explain

- Random Forests build on trees to deliver robust, high-accuracy models

They are extensively used in finance, healthcare, retail, agriculture, and fraud detection, making them must-know models for any analytics professional.

🌳 Decision Tree Model

📌 What is a Decision Tree?

A Decision Tree is a supervised learning algorithm that predicts outcomes by learning if–then rules from data.

It recursively splits the data based on feature values to form a tree-like structure.

- Works for classification and regression

- Non-parametric (no distributional assumptions)

- Highly interpretable

🧩 Structure of a Decision Tree

Key components:

- Root Node – first split

- Internal Nodes – decision points

- Branches – outcomes of a test

- Leaf Nodes – final prediction

🧮 How Does a Decision Tree Learn?

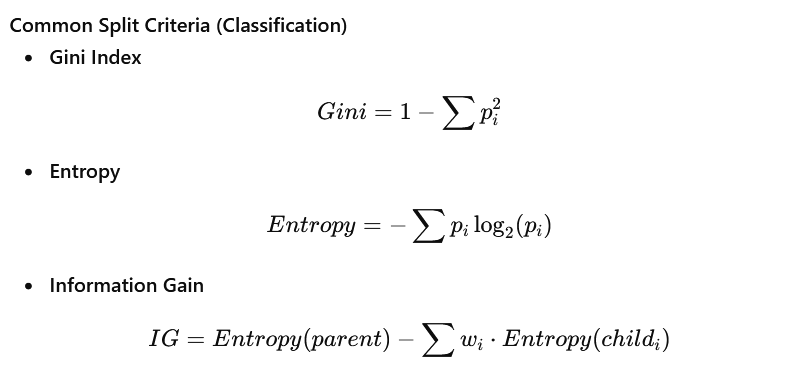

At each node, the algorithm chooses the best feature to split the data using impurity measures.

Common Split Criteria (Classification)

Regression Trees

- Use Mean Squared Error (MSE) or variance reduction

📊 Example: Loan Approval (Classification)

Features:

- Income

- Credit Score

- Employment Status

Rule-based outcome:

IF Credit Score > 700

AND Income > ₹5,00,000

→ Loan Approved

ELSE

→ Loan Rejected

📌 This transparency makes decision trees popular in regulated industries.

✅ Advantages of Decision Trees

✔ Easy to interpret and visualize

✔ Handles non-linear relationships

✔ Works with categorical & numerical data

✔ Minimal preprocessing

❌ Limitations

✘ Overfitting (deep trees)

✘ Sensitive to small data changes

✘ Lower accuracy compared to ensembles

🌲 Random Forest Model

📌 What is a Random Forest?

A Random Forest is an ensemble learning method that builds multiple decision trees and combines their predictions.

Instead of relying on one tree, Random Forests rely on the wisdom of many trees.

🧩 How Random Forest Works

Key ideas:

- Bootstrap Sampling – each tree trains on a random subset of data

- Feature Randomness – each split considers only a random subset of features

- Aggregation

- Classification → Majority voting

- Regression → Average prediction

🧮 Why Random Forests Are Powerful

- Trees are decorrelated

- Overfitting is reduced

- High accuracy on complex data

📊 Example: Customer Churn Prediction

Dataset:

- Tenure

- Monthly Charges

- Service Type

- Complaints

Each tree:

- Learns different patterns

- Makes its own churn prediction

Final output:

Churn = Majority vote of all trees

📌 Used widely in telecom and subscription businesses.

📈 Feature Importance in Random Forest

Random Forests provide feature importance scores, helping answer:

- Which variables matter most?

- What drives predictions?

📌 Example:

| Feature | Importance |

|---|---|

| Monthly Charges | 0.42 |

| Tenure | 0.31 |

| Complaints | 0.19 |

| Contract Type | 0.08 |

🔁 Decision Tree vs Random Forest

| Aspect | Decision Tree | Random Forest |

|---|---|---|

| Interpretability | Very High | Medium |

| Overfitting | High | Low |

| Accuracy | Moderate | High |

| Computation | Fast | Slower |

| Use case | Rule extraction | Prediction performance |

🧪 Simple Python Example

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

# Decision Tree

dt = DecisionTreeClassifier(max_depth=4)

dt.fit(X_train, y_train)

# Random Forest

rf = RandomForestClassifier(n_estimators=200)

rf.fit(X_train, y_train)

🌍 Real-World Applications

| Industry | Use Case |

|---|---|

| Finance | Credit scoring, fraud detection |

| Healthcare | Disease risk prediction |

| Retail | Demand forecasting |

| Agriculture | Crop yield & disease prediction |

| Manufacturing | Defect detection |

| Marketing | Customer segmentation |

⚠️ Common Pitfalls

- Not pruning decision trees

- Ignoring class imbalance

- Using too few trees in Random Forest

- Over-interpreting feature importance as causality

🧾 Key Takeaways

✔ Decision Trees are interpretable and intuitive

✔ Random Forests are robust and high-performing

✔ Trees explain, forests predict

✔ Ensemble methods reduce variance

📚 References & Further Reading

- Breiman, L. (2001). Random Forests. Machine Learning Journal.

- Quinlan, J. R. (1986). Induction of Decision Trees. Machine Learning.

- Hastie, Tibshirani & Friedman. The Elements of Statistical Learning. Springer.

- Géron, A. (2022). Hands-On Machine Learning. O’Reilly.

- scikit-learn Documentation – Tree Models

https://scikit-learn.org/stable/modules/tree.html - Kaggle Learn – Decision Trees & Random Forests

Leave a comment